Chapter 4: Common Distributions

Discrete Distributions

Bernoulli Trials:

- A sequence of trials, each having
  - two possible outcomes
  - a constant probability of “success”
- The trials are independent

In the theory of probability and statistics, a Bernoulli trial is a random experiment with exactly two possible outcomes, “success” and “failure”, in which the probability of success is the same every time the experiment is conducted.

A coin toss is an example: the probability of heads is the same on every flip.
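The three properties above can be illustrated with a small simulation. This is only a sketch: the function name `bernoulli_trials` and the fixed seed are assumptions made for the example, not part of the notes.

```python
import random

def bernoulli_trials(n, p, seed=0):
    """Simulate n independent trials, each a success (1) with probability p."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    # Each draw uses the same p and does not depend on earlier draws.
    return [1 if rng.random() < p else 0 for _ in range(n)]

flips = bernoulli_trials(10_000, 0.5)   # 10,000 fair coin tosses
print(sum(flips) / len(flips))          # observed fraction of successes
```

With many trials, the observed fraction of successes should land close to p.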

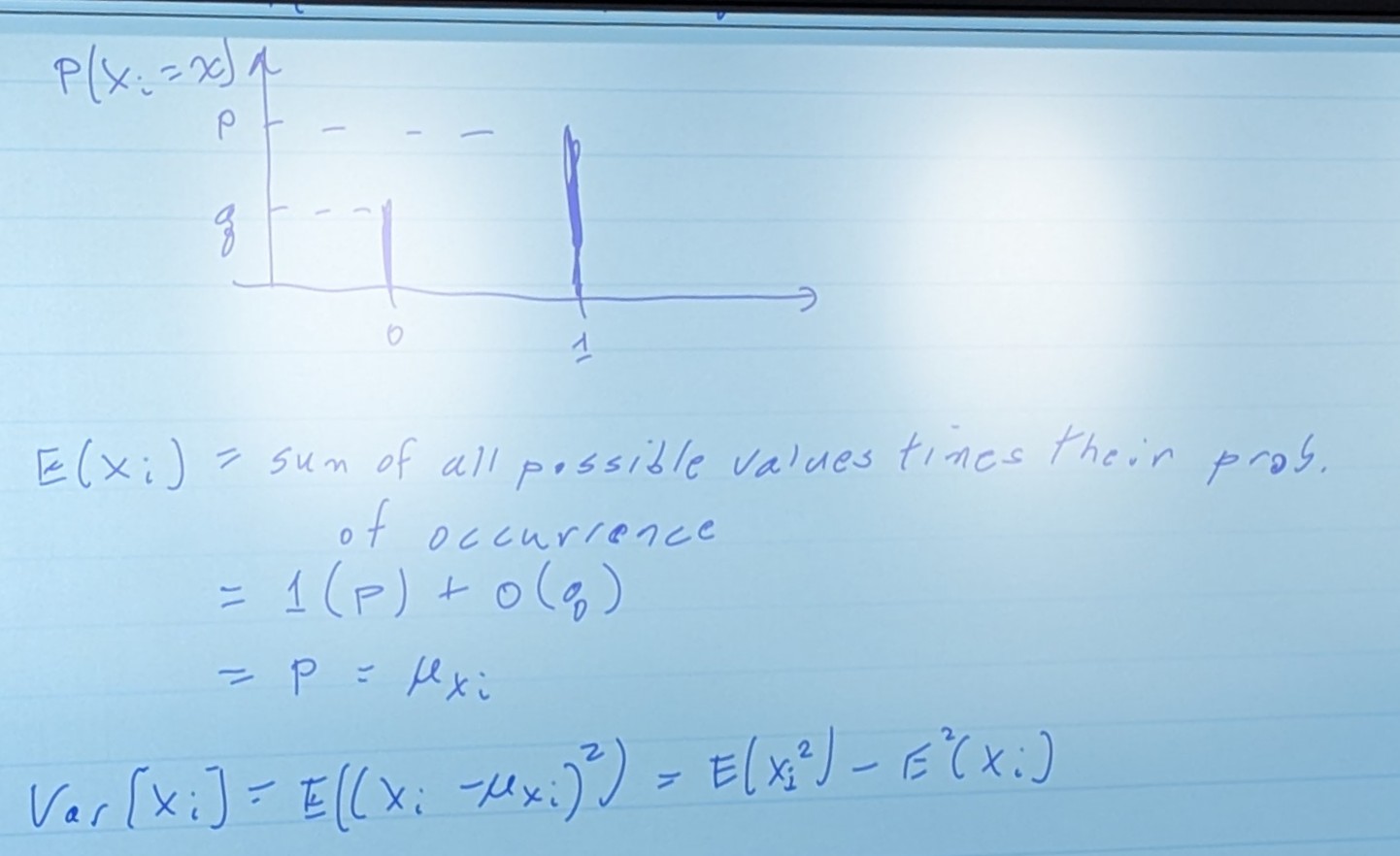

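Let’s define an indicator variable $X_i$ for the $i$th trial:

$$X_i = \begin{cases} 1, & \text{if the } i\text{th trial is a “success”} \\ 0, & \text{if the } i\text{th trial is a “failure”} \end{cases}$$

and let

$$P(X_i = 1) = p, \qquad P(X_i = 0) = 1 - p = q$$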

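The assignment of probabilities above is the Probability Mass Function (PMF) of Xi.

Since Xi takes only the values 0 and 1, $E(X_i) = p$ and $E(X_i^2) = (1)^2 p = p$, so $\mathrm{Var}(X_i) = E(X_i^2) - [E(X_i)]^2 = p - p^2 = p(1 - p) = pq$.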
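The moments of a single Bernoulli variable can be checked directly from its PMF. The sketch below uses exact fractions. The helper name `bernoulli_moments` and the value p = 1/4 are assumptions chosen for illustration.

```python
from fractions import Fraction

def bernoulli_moments(p):
    """Return (mean, second moment, variance) of a Bernoulli(p) variable."""
    q = 1 - p
    pmf = {1: p, 0: q}  # P(X = 1) = p, P(X = 0) = q
    mean = sum(x * prob for x, prob in pmf.items())        # E(X)
    second = sum(x**2 * prob for x, prob in pmf.items())   # E(X^2)
    variance = second - mean**2                            # E(X^2) - E(X)^2
    return mean, second, variance

mean, second, variance = bernoulli_moments(Fraction(1, 4))
print(mean, second, variance)  # 1/4 1/4 3/16
```

The variance 3/16 equals pq = (1/4)(3/4), as expected.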

This leads to distributions:

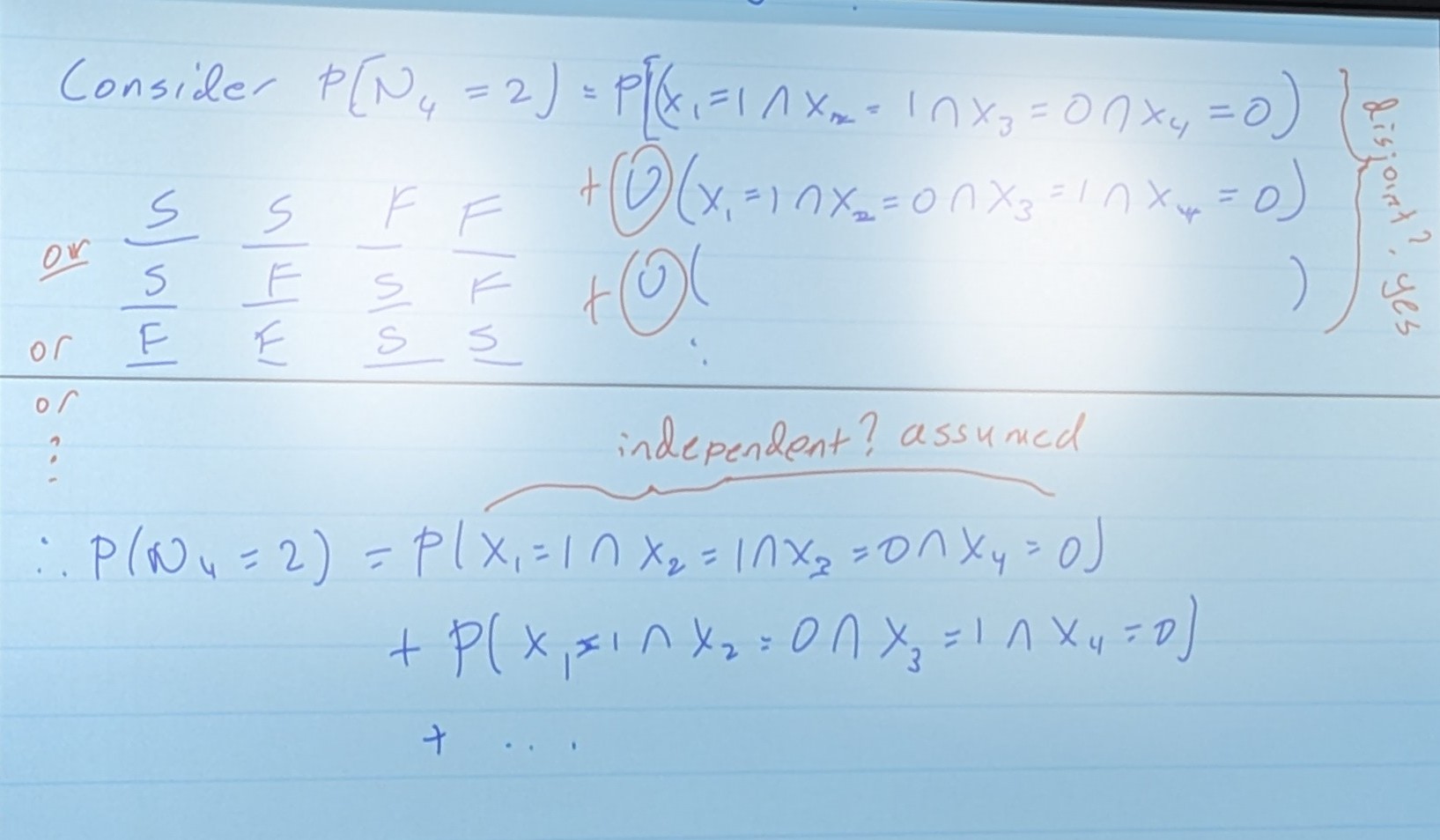

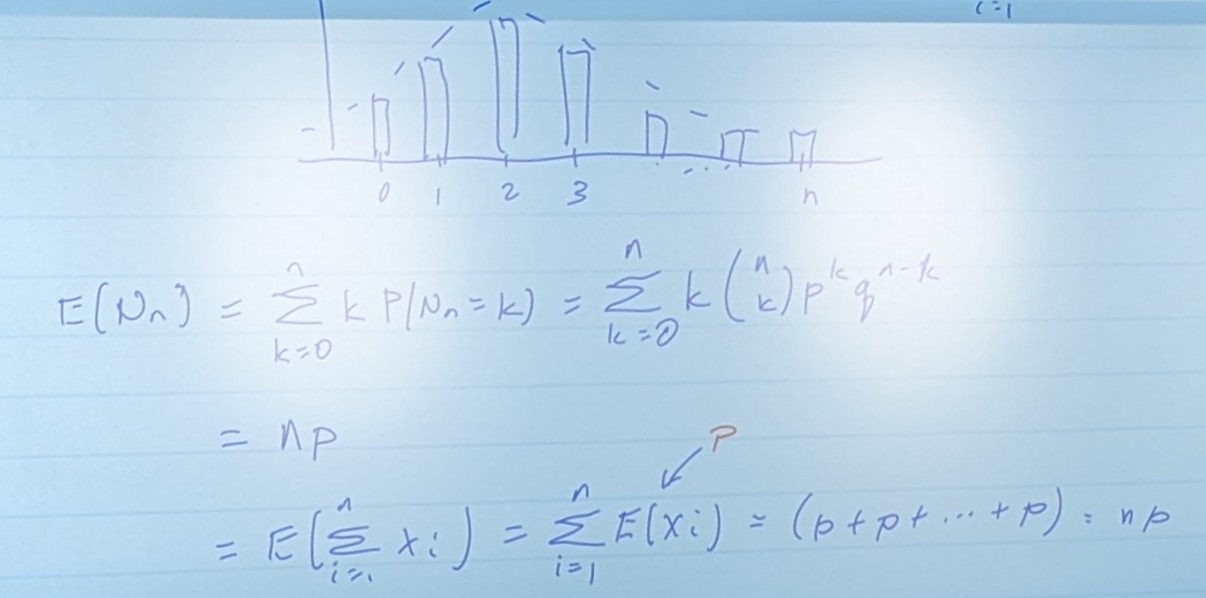

Binomial Distributions

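That is, the distribution of the number of “successes” in $n$ trials.

For example, the probability of exactly two successes in four trials is

$$p^2q^2 + p^2q^2 + \dots = \binom{4}{2} p^2 q^2$$

since each of the $\binom{4}{2} = 6$ orderings of two successes and two failures has probability $p^2q^2$.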
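The counting argument for two successes in four trials can be verified by brute force. This sketch lists every success/failure sequence of length four and adds the probabilities of those with exactly two successes. The function name and the choice p = 1/3 are assumptions for the example.

```python
from fractions import Fraction
from itertools import product
from math import comb

def prob_k_successes_by_enumeration(n, k, p):
    """Sum the probabilities of all length-n outcomes with exactly k successes."""
    q = 1 - p
    total = 0
    for outcome in product([0, 1], repeat=n):  # every sequence of 0s and 1s
        if sum(outcome) == k:
            # Independence: multiply p for each success, q for each failure.
            total += p**k * q**(n - k)
    return total

p = Fraction(1, 3)
enumerated = prob_k_successes_by_enumeration(4, 2, p)
closed_form = comb(4, 2) * p**2 * (1 - p)**2
print(enumerated, closed_form)  # 8/27 8/27
```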

General Formula for distributions
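As a hedged reconstruction of the general case, the standard binomial PMF follows the same reasoning as the four-trial example. Any particular ordering of $k$ successes and $n - k$ failures has probability $p^k q^{n-k}$ by independence, and there are $\binom{n}{k}$ such orderings. Here $N_n$ is an assumed name for the number of successes in $n$ trials.

$$P(N_n = k) = \binom{n}{k} p^k q^{n-k}, \qquad k = 0, 1, \dots, n$$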

Variance for Bernoulli Trials

since $\mathrm{Var}(X_i) = pq$

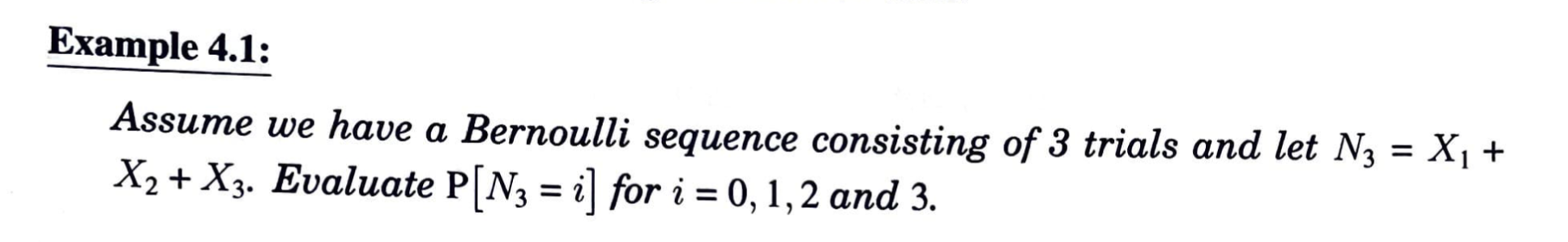

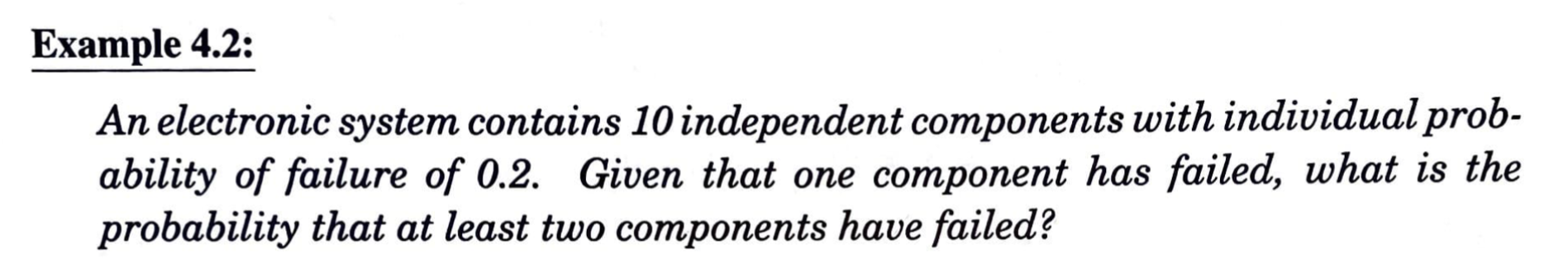
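Because the trials are independent, the variances of the individual Xi add, which gives the standard results of mean np and variance npq for the number of successes in n trials. The sketch below checks this from the binomial PMF with exact fractions. The function names and the values n = 10, p = 1/4 are assumptions for illustration.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(n, k, p):
    """P(exactly k successes in n independent trials)."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

def binomial_mean_variance(n, p):
    """Compute the mean and variance directly from the PMF."""
    mean = sum(k * binomial_pmf(n, k, p) for k in range(n + 1))
    second = sum(k**2 * binomial_pmf(n, k, p) for k in range(n + 1))
    return mean, second - mean**2

n, p = 10, Fraction(1, 4)
mean, variance = binomial_mean_variance(n, p)
print(mean, variance)  # 5/2 15/8
```

Here 5/2 = np and 15/8 = npq, matching the sum of n independent Bernoulli variances.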

Example 1

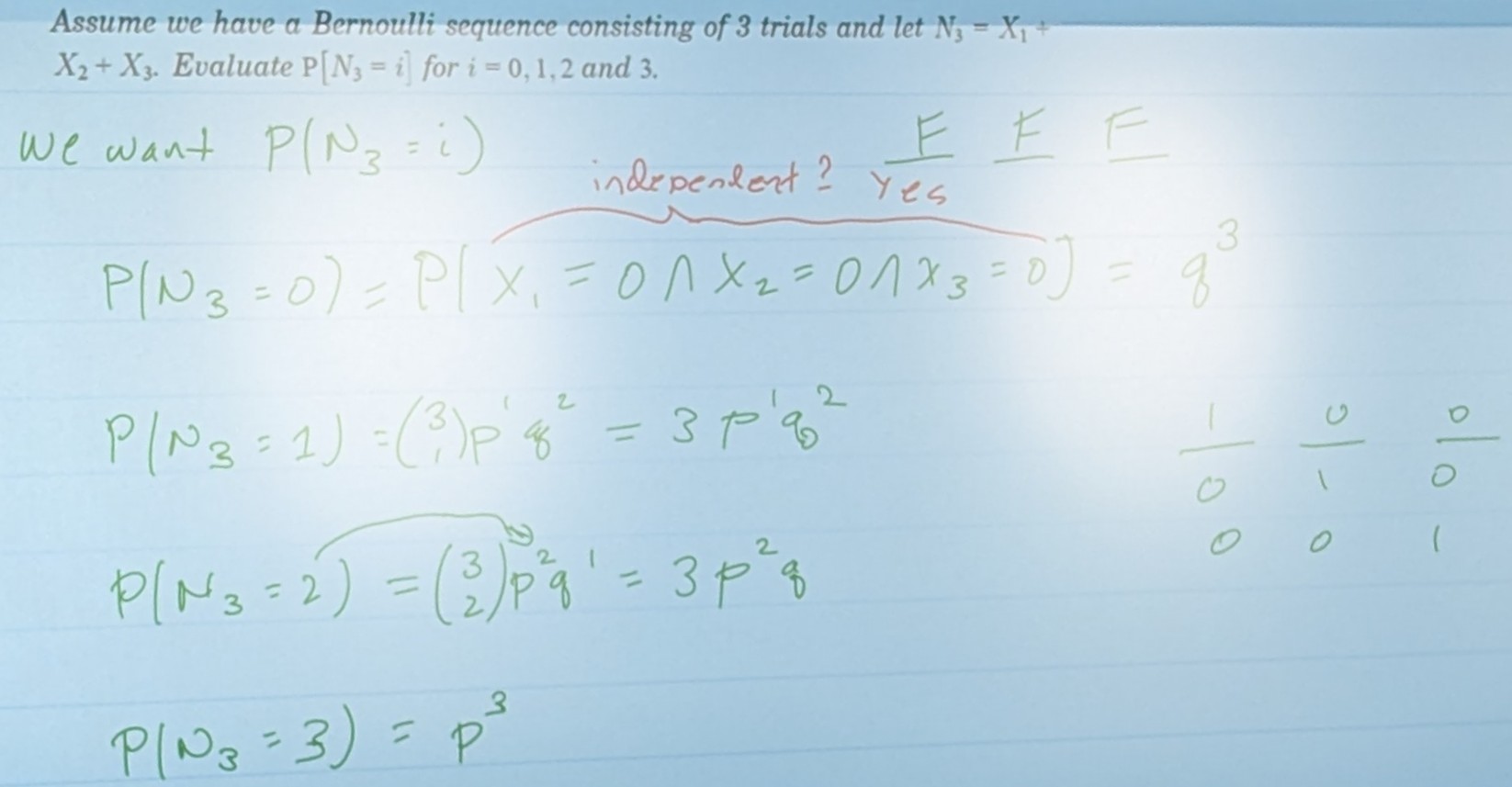

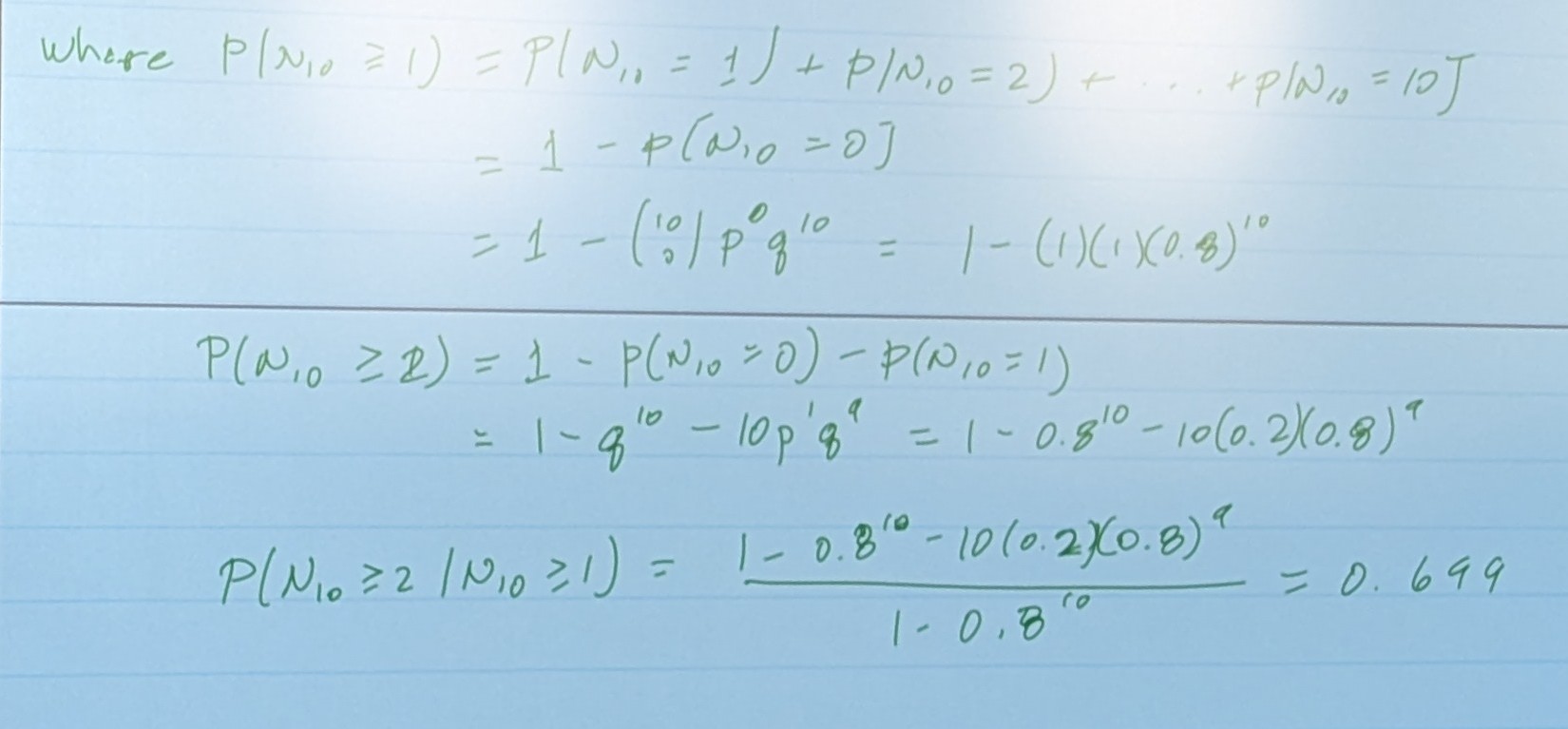

Example 2

This is a sequence of Bernoulli trials, so we can assume independence. Without that assumption, the problem is not solvable.

$N_{10}$ = number of failures in $N$ trials

We want to find

Recall That...

So…

Worked out that is…

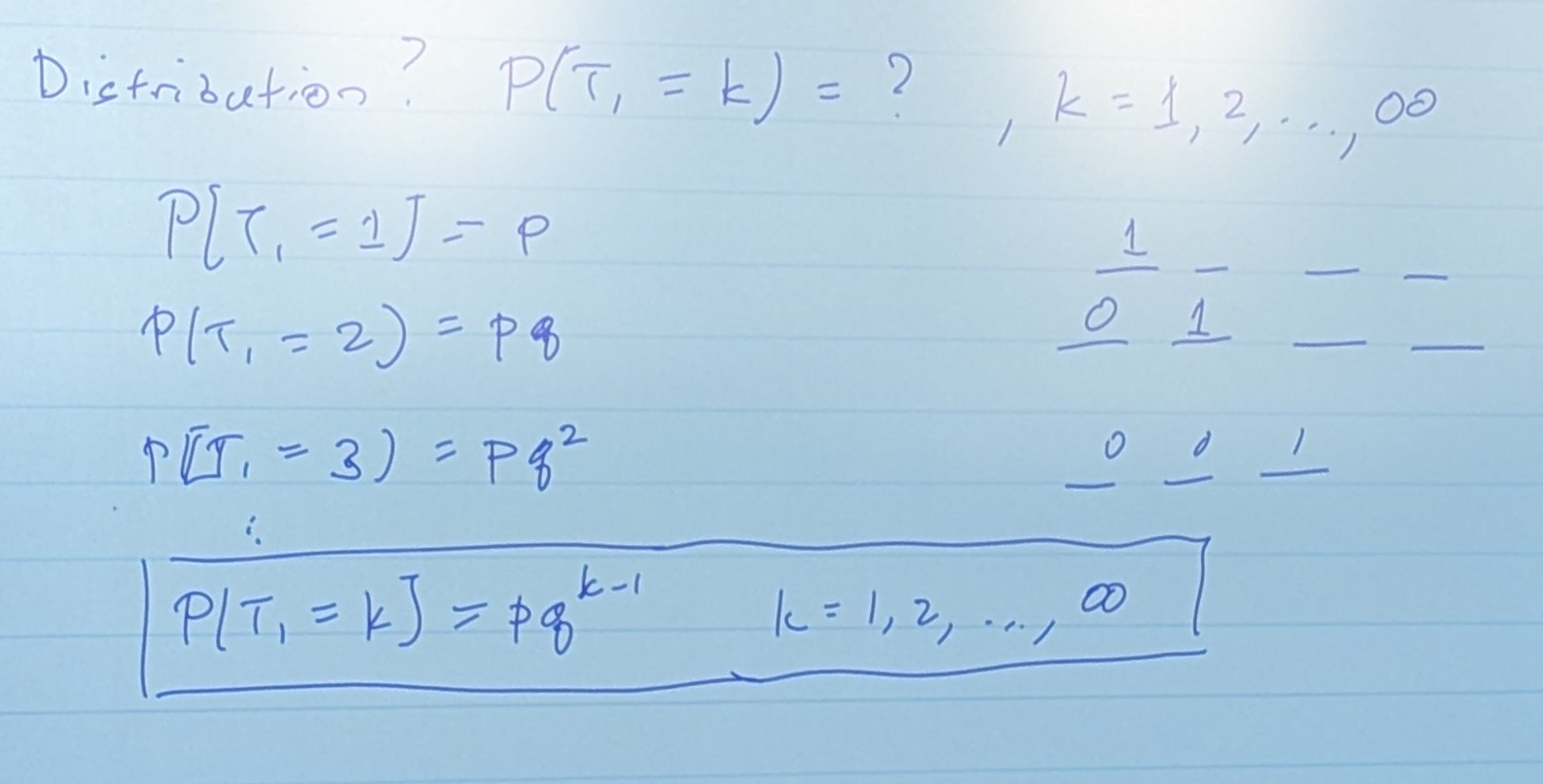

Geometric Distribution

Let $T_1$ = number of trials until the first (or next) “success”.

Distribution?

$$P(T_1 = k) = \;?, \qquad k = 1, 2, \dots$$
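One way to approach the question: the first success falls on trial k exactly when the first k - 1 trials are failures and trial k is a success. By independence this has probability q^(k-1) p, which is the standard geometric PMF. The sketch below evaluates it with exact fractions. The function name `geometric_pmf` and the value p = 1/2 are assumptions for the example.

```python
from fractions import Fraction

def geometric_pmf(k, p):
    """P(first success on trial k): k - 1 failures, then one success."""
    if k < 1:
        raise ValueError("k must be a positive integer")
    return (1 - p)**(k - 1) * p

p = Fraction(1, 2)
first_five = [geometric_pmf(k, p) for k in range(1, 6)]
print([str(x) for x in first_five])  # ['1/2', '1/4', '1/8', '1/16', '1/32']
print(sum(first_five))               # 31/32
```

The partial sums approach 1 as more terms are included, as a PMF over k = 1, 2, … requires.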